Estimated reading time: 12 minutes

Key Takeaways

- Edge computing processes IoT data locally, reducing latency and bandwidth costs

- Cloud-only IoT architectures face significant challenges with latency, costs, and resilience

- Time-sensitive, safety-critical workloads should run at the edge, not in the cloud

- Protocols like MQTT and OPC UA enable effective edge computing implementation

- Edge orchestration is essential for managing deployments at scale

- Real-world implementations show significant benefits across multiple industries

IoT deployments face a critical turning point. Edge computing for IoT has transformed from a nice-to-have feature into an essential component of any serious IoT architecture. Most systems today remain over-reliant on cloud computing when they should be pushing more logic to the IoT edge. This shift isn’t just a technical preference—it’s now a fundamental requirement for building resilient, cost-effective, and responsive IoT systems.

What is Edge Computing for IoT?

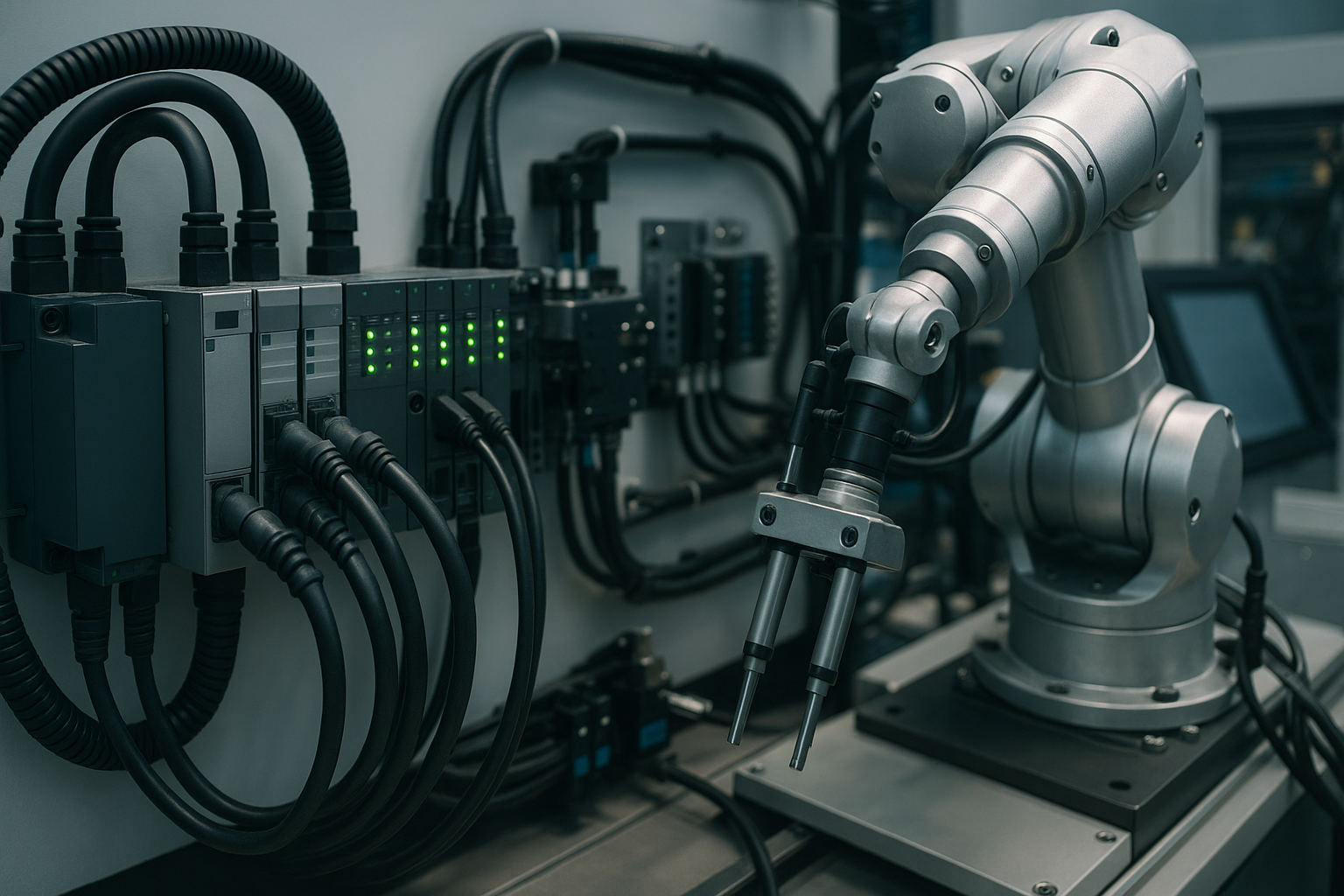

Edge computing for IoT means processing data on or near the devices that generate it, rather than sending everything to distant cloud data centers. This approach provides compute power, storage, and software capabilities close to where data originates, enabling immediate, local decision-making.

The IoT edge consists of the hardware and software components positioned at the boundary between IoT endpoints and the cloud—including gateways, devices, and micro-data centers. These components should handle significantly more processing than they typically do in current architectures.

The combination of IoT and edge computing creates a powerful paradigm for modern applications requiring real-time responses.

Why Cloud-Only IoT Is Problematic

Latency Issues

Cloud round-trips introduce unpredictable delays, often exceeding 300ms. For many IoT applications, such as robotic controls, industrial valves, or responsive HVAC systems, these delays are unacceptable. When milliseconds matter, edge computing provides the only viable solution by processing data locally and responding in real time.

Moving the decision loop to the edge removes the cloud round-trip entirely, and reductions in response time of up to 95% compared with cloud-only architectures are commonly cited.

Bandwidth Costs

Raw data streaming to the cloud becomes prohibitively expensive at scale. Consider a factory with hundreds of sensors each generating readings every second—continuous cloud uploads quickly become unsustainable from a cost perspective. Edge processing that filters, compresses, and prioritizes data before transmission can reduce bandwidth costs by 70% or more.
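One common way to filter at the edge is a deadband (report-by-exception) filter, which forwards a reading only when it changes meaningfully or when a heartbeat interval expires. The sketch below is a minimal illustration, not a measured result; the function name `deadband_filter` and the parameters `threshold` and `max_silence_s` are assumptions introduced for this example.

```python
from typing import Iterable, Iterator, Optional, Tuple

Reading = Tuple[float, float]  # (timestamp_seconds, value)


def deadband_filter(
    readings: Iterable[Reading],
    threshold: float,
    max_silence_s: float,
) -> Iterator[Reading]:
    """Yield only the readings worth sending upstream.

    A reading is forwarded when it differs from the last forwarded value by
    more than `threshold`, or when at least `max_silence_s` seconds have
    passed since the last forwarded reading (a heartbeat).
    """
    last_sent: Optional[Reading] = None
    for ts, value in readings:
        if (
            last_sent is None
            or abs(value - last_sent[1]) > threshold
            or ts - last_sent[0] >= max_silence_s
        ):
            last_sent = (ts, value)
            yield last_sent


if __name__ == "__main__":
    # Hypothetical temperature sensor sampled once per second.
    values = [20.0, 20.1, 20.0, 20.1, 23.5, 23.6, 23.5, 23.6, 23.5, 23.6]
    raw = [(float(t), v) for t, v in enumerate(values)]
    sent = list(deadband_filter(raw, threshold=0.5, max_silence_s=60.0))
    print(f"forwarded {len(sent)} of {len(raw)} readings")
```

How much bandwidth this saves depends entirely on how noisy the signal is and how the threshold is chosen, so the savings should be measured per sensor type rather than assumed.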

Effective edge strategies can transform the economics of large-scale IoT deployments.

Resilience Challenges

Cloud-dependent IoT architectures break down when connectivity fails. If every device requires a working backhaul connection, the entire system becomes vulnerable to network issues. Edge logic enables graceful degradation—devices continue operating, make local decisions, and buffer data for later synchronization when connectivity returns. Intelligent agents at the edge maintain critical functions during outages.
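The buffering behavior described above is often called store-and-forward. The following is a minimal in-memory sketch; the class name `StoreAndForwardBuffer` and the `send` callback are hypothetical, and a real device would likely persist the queue to flash or disk so that data survives a reboot.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict

Message = Dict[str, Any]


class StoreAndForwardBuffer:
    """Bounded queue that holds messages while the uplink is down."""

    def __init__(self, max_messages: int) -> None:
        # deque(maxlen=...) discards the oldest entry once the buffer is full.
        self._queue: Deque[Message] = deque(maxlen=max_messages)

    def enqueue(self, message: Message) -> None:
        self._queue.append(message)

    def flush(self, send: Callable[[Message], bool]) -> int:
        """Send buffered messages oldest-first; stop at the first failure.

        `send` must return True on success. Returns the number sent.
        """
        sent = 0
        while self._queue:
            if not send(self._queue[0]):
                break  # uplink still down; keep the message for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)
```

Dropping the oldest message when full is one design choice among several. A deployment that values early readings more than recent ones, or that needs per-priority queues, would choose a different eviction policy.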

Resilient IoT systems require significant edge computing capabilities to maintain operations during connectivity disruptions.

Data Gravity Reality

Data inherently “wants” to stay local—moving vast amounts to distant clouds works against both physics and economics. Processing data near its source aligns with data gravity principles and delivers faster insights while reducing transmission costs.

Research confirms that processing data where it’s generated significantly improves overall system efficiency.

Edge vs Cloud: Where Workloads Belong

| Workload | Should Run At | Why |
|---|---|---|
| Hard real-time control | Edge | Sub-50ms responses required |
| Safety-critical logic | Edge | Must work without cloud dependency |
| Local automation | Edge | Needs to function during outages |
| Privacy-sensitive tasks | Edge | Keeps sensitive data local |
| Aggregation & reporting | Cloud | Benefits from cross-system views |
| Long-term analytics | Cloud | Requires historical data access |
| Cross-fleet ML training | Cloud | Needs broad data and compute power |

The proper allocation principle is straightforward: anything time-sensitive, safety-critical, or bandwidth-intensive must reside at the edge. Cloud services should complement edge processing, not replace it.
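The allocation principle can be written down as a small classification helper, which is useful when inventorying workloads. This is only a sketch of the rule as stated here; the `Workload` fields and the `placement` function are names invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    time_sensitive: bool = False
    safety_critical: bool = False
    bandwidth_intensive: bool = False


def placement(workload: Workload) -> str:
    """Return "edge" if any of the three criteria applies, otherwise "cloud"."""
    if (
        workload.time_sensitive
        or workload.safety_critical
        or workload.bandwidth_intensive
    ):
        return "edge"
    return "cloud"


if __name__ == "__main__":
    for w in (
        Workload("valve control loop", time_sensitive=True, safety_critical=True),
        Workload("monthly fleet report"),
    ):
        print(f"{w.name}: {placement(w)}")
```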

Enabling Technologies

MQTT Protocol

MQTT offers a lightweight publish/subscribe messaging model ideal for bandwidth-constrained IoT deployments. Local broker patterns enable disconnected operation with efficient cloud synchronization when connectivity returns.

MQTT brokers at the edge provide the foundation for resilient IoT communication architectures.
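A local broker decides which subscribers receive a message by matching its topic against each subscription's topic filter. The sketch below implements the standard MQTT wildcard rules (`+` for exactly one level, `#` for all remaining levels) in plain Python so the routing logic is visible. The function name `topic_matches` and the example topics are assumptions; production deployments would rely on an existing broker rather than reimplementing this.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if `topic` matches the MQTT `topic_filter`."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")

    # A wildcard in the first level must not match topics beginning with "$"
    # (for example the broker's own $SYS topics).
    if topic.startswith("$") and filter_levels[0] in ("+", "#"):
        return False

    for i, level in enumerate(filter_levels):
        if level == "#":
            # "#" is only valid as the last level; there it matches the
            # parent level and everything beneath it.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


if __name__ == "__main__":
    print(topic_matches("factory/+/temp", "factory/line1/temp"))
    print(topic_matches("factory/#", "factory/line1/pump3/pressure"))
```

A topic hierarchy designed around these wildcards (for example site, line, device, measurement) lets an edge gateway subscribe broadly on the local broker while forwarding only selected subtrees to the cloud.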

OPC UA Protocol

In industrial settings, OPC UA provides structured data exchange between equipment. A common pattern involves OPC UA devices publishing to edge gateways, which then convert or bridge data to MQTT for broader integration.
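The bridging step amounts to translating each OPC UA data change into an MQTT topic and payload. The mapping below is a hypothetical convention, not part of either standard: it assumes string node IDs of the form `ns=<index>;s=<dotted.path>`, turns the dotted path into topic levels, and serializes the value as JSON. The function name `opcua_to_mqtt`, the `plant` prefix, and the payload field names are all illustrative.

```python
import json
from typing import Tuple


def opcua_to_mqtt(
    node_id: str,
    value: float,
    source_timestamp: str,
    topic_prefix: str = "plant",
) -> Tuple[str, str]:
    """Map one OPC UA data change to an (MQTT topic, JSON payload) pair."""
    namespace_part, _, identifier_part = node_id.partition(";")
    if not namespace_part.startswith("ns=") or not identifier_part.startswith("s="):
        raise ValueError(f"unsupported node id: {node_id!r}")

    # "Line1.Pump3.Pressure" becomes "Line1/Pump3/Pressure".
    path = identifier_part[2:].replace(".", "/")
    topic = f"{topic_prefix}/{path}"
    payload = json.dumps(
        {"node_id": node_id, "value": value, "ts": source_timestamp},
        sort_keys=True,
    )
    return topic, payload


if __name__ == "__main__":
    topic, payload = opcua_to_mqtt(
        "ns=2;s=Line1.Pump3.Pressure", 4.2, "2024-01-01T00:00:00Z"
    )
    print(topic)
    print(payload)
```

Keeping the original node ID in the payload makes it possible to trace a cloud-side record back to the exact OPC UA variable it came from.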

Architecting On-Device Processing

Successful edge architectures employ several key patterns:

- Local rules engines that execute business logic without cloud round-trips

- Real-time feature extraction that processes raw data before transmission

- Anomaly detection that flags issues immediately where they occur

- Offline-first data pipelines with buffers, retry logic, and prioritization mechanisms

Advanced edge architectures leverage these patterns to maximize efficiency.
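As an illustration of the anomaly detection pattern, the sketch below flags readings that sit far from a rolling baseline using a z-score. It is one simple technique among many and is not claimed to be what any particular product uses; the class name `RollingAnomalyDetector`, the window size, the warm-up count of five samples, and the threshold of three standard deviations are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a rolling window of recent values."""

    def __init__(self, window: int = 30, z_threshold: float = 3.0) -> None:
        self._values: Deque[float] = deque(maxlen=window)
        self._z_threshold = z_threshold

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self._values) >= 5:  # wait for a few samples before judging
            mu = mean(self._values)
            sigma = pstdev(self._values)
            if sigma > 0:
                is_anomaly = abs(value - mu) / sigma > self._z_threshold
        # Anomalous values are kept out of the baseline so that one spike
        # does not widen the window and hide the next one.
        if not is_anomaly:
            self._values.append(value)
        return is_anomaly


if __name__ == "__main__":
    detector = RollingAnomalyDetector(window=10)
    for reading in [10.0, 10.1, 9.9, 10.0, 10.1, 9.9, 10.0, 15.0, 10.0]:
        print(reading, detector.update(reading))
```

Excluding flagged values from the baseline has a cost: a genuine, lasting level shift will keep being reported as anomalous. A deployment that expects such shifts would need a reset or adaptation rule on top of this.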

Edge security must be robust but pragmatic, implementing certificate management, least-privilege access, and tenant isolation without making deployments unmanageable.

Edge Orchestration at Scale

Managing edge deployments at scale requires orchestration capabilities that cover:

- Deployment automation for consistent configuration across device fleets

- Update mechanisms that support reversible, incremental changes

- Health monitoring with watchdogs and circuit breakers for resilience

- Failure management that enables local failover when problems occur
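The circuit breaker mentioned in the list can be sketched in a few lines. After a configurable number of consecutive failures it stops calling the failing dependency for a cooldown period, so the device can switch to local fallback logic instead of blocking on a dead uplink. The names `CircuitBreaker` and `CircuitOpenError`, the default thresholds, and the injectable `clock` parameter are assumptions made for this example.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the breaker is open."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_timeout_s: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._failure_threshold = failure_threshold
        self._reset_timeout_s = reset_timeout_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_timeout_s:
                raise CircuitOpenError("breaker open; use local fallback")
            # Cooldown elapsed: let one trial call through (half-open state).
        try:
            result = func()
        except Exception:
            self._failures += 1
            half_open = self._opened_at is not None
            if half_open or self._failures >= self._failure_threshold:
                self._opened_at = self._clock()  # open, or re-open after a failed trial
            raise
        # Any success closes the breaker and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

A caller would typically wrap each cloud request in `breaker.call(...)` and treat `CircuitOpenError` as the signal to run the local failover path.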

Organizations without edge orchestration aren’t ready for production-scale IoT deployments.

Mature edge orchestration is the foundation for sustainable IoT operations at scale.

Real-World Evidence

Factory Automation

A manufacturing facility implemented edge computing with OPC UA and MQTT protocols, reducing control loop latency from 300ms (cloud-based) to 20ms (edge-local). Bandwidth costs dropped by 70% as only meaningful events—not raw telemetry—required cloud transmission.

Agricultural IoT

Edge-based irrigation controllers maintained watering schedules through three days of network outages, preventing crop damage that would have occurred with cloud-dependent systems. The offline-first design proved essential in rural areas with inconsistent connectivity. Green technology solutions benefit significantly from edge computing capabilities.

Retail Analytics

By implementing on-device video analytics, a retail chain extracted customer traffic patterns locally instead of streaming continuous footage. This reduced bandwidth consumption by 95% while still providing all the insights they needed for business decisions.

Taken together, these examples suggest that edge computing can deliver substantial returns across multiple industries.

Addressing Common Counterarguments

“Cloud is Cheaper and Simpler”

While cloud deployments may seem simpler initially, they often hide significant costs in bandwidth, operations, and downtime risk. A total cost of ownership analysis typically reveals edge computing as more economical for data-intensive IoT applications over time.

“We Need Centralized Control”

Modern edge orchestration provides centralized governance without centralizing all processing. You can maintain complete visibility and control while still benefiting from distributed computing.

“Our ML Models Need Cloud Resources”

The optimal approach is “train in cloud, infer at edge.” Use cloud resources for data-intensive model training, then deploy optimized models to edge devices for real-time inference without latency or connectivity dependencies. AI trends increasingly point toward this hybrid approach.
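To make the split concrete, the sketch below shows the edge half only: a device loads parameters that were trained elsewhere and runs inference locally with no network call. It assumes a logistic-regression model exported as a JSON object with `weights` and `bias` fields; that export format and the class name `EdgeClassifier` are hypothetical, and real deployments often use a dedicated on-device runtime instead.

```python
import json
import math
from typing import Sequence


class EdgeClassifier:
    """Logistic-regression inference using parameters trained elsewhere."""

    def __init__(self, weights: Sequence[float], bias: float) -> None:
        self._weights = list(weights)
        self._bias = bias

    @classmethod
    def from_json(cls, blob: str) -> "EdgeClassifier":
        params = json.loads(blob)
        return cls(params["weights"], params["bias"])

    def predict_proba(self, features: Sequence[float]) -> float:
        if len(features) != len(self._weights):
            raise ValueError("feature count does not match model")
        z = self._bias + sum(w * x for w, x in zip(self._weights, features))
        # Clamp the logit so math.exp cannot overflow on extreme inputs.
        z = max(-60.0, min(60.0, z))
        return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    # Hypothetical parameters pushed to the device by the orchestration layer.
    model = EdgeClassifier.from_json('{"weights": [1.0, -1.0], "bias": 0.0}')
    print(model.predict_proba([5.0, 0.0]))
```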

Research demonstrates that edge computing enhances, rather than replaces, cloud capabilities.

Implementation Roadmap

- Inventory and classify current IoT workloads by latency requirements

- Start with high-volume sensors by implementing on-device processing

- Establish edge orchestration for device management at scale

- Instrument key metrics including latency, bandwidth usage, and uptime

- Implement offline capabilities with local data storage and synchronization

Success metrics should include reduced latency (verify sub-50ms responses for control applications), decreased bandwidth costs, improved uptime during connectivity disruptions, and faster development iterations. IoT prototyping and testing should focus on these key metrics.
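The sub-50ms check is easier to enforce when it is computed from recorded samples rather than judged by eye. The sketch below uses a nearest-rank percentile so that a few slow outliers are visible instead of being averaged away. Checking the 95th percentile is an assumption made for this example (a hard real-time loop may need to check the maximum instead), and the function names are illustrative.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of `samples` for 0 < pct <= 100."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def meets_latency_target(
    samples_ms: Sequence[float],
    target_ms: float = 50.0,
    pct: float = 95.0,
) -> bool:
    """Return True if the chosen percentile is strictly below the target."""
    return percentile(samples_ms, pct) < target_ms


if __name__ == "__main__":
    # Hypothetical control-loop latencies in milliseconds.
    samples = [10.0] * 18 + [40.0, 120.0]
    print(percentile(samples, 95.0), meets_latency_target(samples))
```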

Conclusion

Cloud computing should augment—never dominate—your IoT strategy. Edge computing for IoT has become the default approach for critical operations, not an optional enhancement. Systems still relying entirely on cloud processing for time-sensitive decisions are increasingly fragile, costly, and technologically outdated.

The path forward is clear: pilot an offline-first, edge-centric approach immediately, implement protocols like MQTT and OPC UA, and establish proper edge orchestration. Your IoT deployments will become more resilient, responsive, and cost-effective—delivering the real-time value that IoT has always promised.

FAQ

Q1: What exactly is edge computing for IoT?

A1: Edge computing for IoT is the practice of processing data on or near the devices that generate it, rather than sending everything to distant cloud data centers. This approach brings compute power, storage, and software capabilities closer to where data originates, enabling immediate, local decision-making with reduced latency and bandwidth requirements.

Q2: Why is cloud-only IoT problematic?

A2: Cloud-only IoT faces four major challenges: high latency (often exceeding 300ms) that’s unacceptable for real-time control applications; expensive bandwidth costs when streaming raw data continuously; poor resilience when connectivity fails; and inefficiency that works against data gravity principles, which favor processing data near its source.

Q3: Which workloads should run at the edge versus in the cloud?

A3: Time-sensitive, safety-critical, or bandwidth-intensive workloads should run at the edge. This includes hard real-time control, safety-critical logic, local automation, and privacy-sensitive tasks. Cloud services are better for workloads that benefit from centralization, like aggregation, reporting, long-term analytics, and cross-fleet machine learning training.

Q4: What technologies enable effective edge computing for IoT?

A4: Key enabling technologies include lightweight messaging protocols like MQTT for efficient data exchange, industrial protocols like OPC UA for structured data in manufacturing environments, local rules engines for on-device processing, real-time feature extraction algorithms, and robust edge orchestration platforms that manage deployment, updates, and monitoring at scale.

Q5: How should organizations implement edge computing in their IoT strategy?

A5: Start by inventorying and classifying current IoT workloads by latency requirements. Implement on-device processing for high-volume sensors first, then establish edge orchestration for device management at scale. Instrument key metrics (latency, bandwidth usage, uptime) and develop offline capabilities with local data storage and synchronization. Focus on creating an offline-first, edge-centric architecture that uses cloud services as a complement, not a requirement.